2016

CIID master thesis

Objectifier empowers people to train objects in their daily environment to respond to their unique behaviors. It offers the experience of training an artificial intelligence: a shift from passive consumer to active, playful director of domestic technology. Interacting with Objectifier is much like training a dog: you teach it only what you want it to care about. Just like a dog, it sees and understands its environment.

Using computer vision and a neural network, Objectifier associates complex behaviors with your commands. For example, you might want to turn on your radio with your favorite dance move. Connect your radio to the Objectifier and use the training app to show it when the radio should turn on. In this way, people can experience new, interactive ways to control objects and build a creative relationship with technology without any programming knowledge.

Build, test, repeat

An 8-week design process

Could machine learning be a way of programming? This was one of my initial research questions. Why should we learn the language of the machine when the machine could learn to understand ours? How would we design an interface for “teaching” in physical space, and how would the machine manifest itself?

As a designer, I was interested in the future of the language of, and relationship between, machines and humans; as a maker, I wanted to bring the power of machine learning into the hands of everyday people.

The concept is called "Spatial Programming": a way to program, or rather train, a computer by showing it how something is done. When the space itself becomes the program, the objects, walls, lights, people and actions in it all become functions that are part of that program. When you are present in the space, these functions can be moved and manipulated in a physical, human way. This spatial manifestation of a programming language opens up new, creative interactions without the need for a screen or a single line of code.

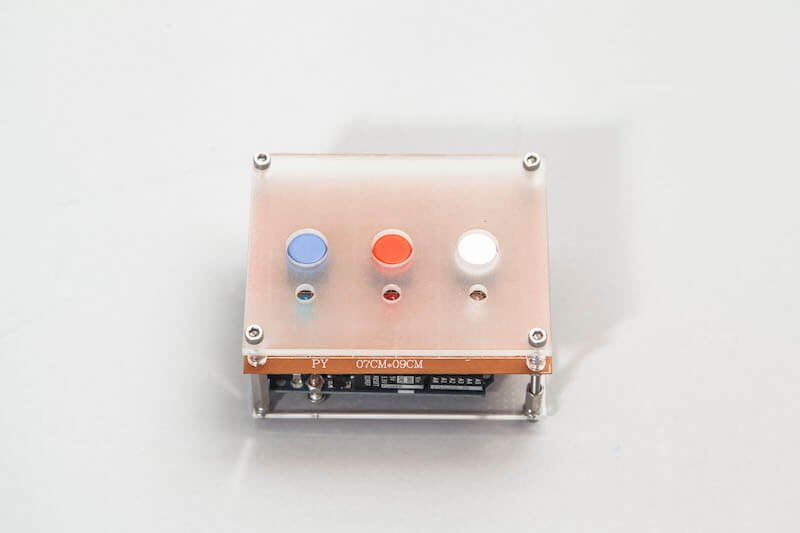

Prototype 1 – Pupil

A physical interface for the machine learning program Wekinator. It served as a remote control for exploring different ideas. Pressing the red or the white button records training data.

Pressing the blue button makes the neural network process the recorded data and run, giving feedback. Later, the Pupil became a prop for conversations with dog trainers about the physical manifestation of machine learning.
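The Pupil's record/train/run cycle is the basic supervised-learning loop. Below is a minimal sketch of that loop, assuming two normalized sensor readings per example, with red recording "on" examples and white recording "off" examples. Wekinator offers several model types; here a single logistic unit trained by gradient descent stands in for the neural network, and the class and method names are hypothetical, not Wekinator's API.

```python
import math
import random


class PupilSketch:
    """Hypothetical record/train/run loop; not Wekinator's actual API."""

    def __init__(self, n_inputs, lr=0.5, epochs=500, seed=0):
        rng = random.Random(seed)
        self.weights = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
        self.bias = 0.0
        self.lr = lr
        self.epochs = epochs
        self.examples = []  # recorded (features, label) pairs

    def record(self, features, label):
        # Red button -> label 1 ("on"), white button -> label 0 ("off").
        self.examples.append((list(features), label))

    def _probability(self, features):
        # Logistic unit: weighted sum squashed into the range (0, 1).
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def train(self):
        # Blue button: stochastic gradient descent on the log-loss.
        for _ in range(self.epochs):
            for features, label in self.examples:
                error = self._probability(features) - label
                self.weights = [w - self.lr * error * x
                                for w, x in zip(self.weights, features)]
                self.bias -= self.lr * error

    def run(self, features):
        # Feedback: True means the output should switch on.
        return self._probability(features) >= 0.5


pupil = PupilSketch(n_inputs=2)
for reading in [(0.9, 0.8), (0.8, 0.95), (0.85, 0.9)]:
    pupil.record(reading, 1)  # red: "this is when it should react"
for reading in [(0.1, 0.2), (0.15, 0.05), (0.2, 0.1)]:
    pupil.record(reading, 0)  # white: "this is when it should not"
pupil.train()
print(pupil.run((0.88, 0.85)), pupil.run((0.12, 0.1)))  # True False
```

The point of the sketch is the division of labor the three buttons imply: recording only collects labeled examples, and nothing is learned until the train step runs over all of them.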

“Soon We Won’t Program Computers. We’ll Train Them Like Dogs” was one of the headlines in Wired's 2016 issue “The End of Code”. The dog-training analogy inspired me to investigate its assumptions myself, so I set out to visit real dog trainers.

Observing their training techniques, tools and interactions revealed a world full of inspiration and similarities to machine learning. The power of the dog analogy is that anyone can grasp how this complicated technology works without any knowledge of programming.

Prototype 2 – Trainee v1

A prototyping tool that lets makers train any input sensor and connect it to an output without writing any code. Trainee can combine and cross multiple output pins to create more complex trained behavior.

The Trainee can be integrated into existing circuits or used to turn the readings of advanced sensors into a simple output.

Prototype 3 – Trainee v2

A refined version of Trainee v1, released as an open-source PCB design for building your own Trainee board. This build is based on a small Teensy microcontroller and comes with a digital interface called Coach.

The physical interface is now a single button, and the board has 4 inputs and 4 outputs that can be trained and combined for more complex logic.
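One way to picture "4 inputs and 4 outputs that can be trained and combined" is to store each training example as a full input reading paired with a full output pattern, so that a single example can switch several pins at once. The sketch below assumes normalized analog inputs and uses 1-nearest-neighbor lookup as a stand-in for the board's actual learning algorithm, which is not described here; the example data and the function name are hypothetical.

```python
import math

# Hypothetical training examples: (4 normalized input readings, 4 output pin states).
examples = [
    ((0.0, 0.0, 0.0, 0.0), (0, 0, 0, 0)),  # nothing happening: all outputs off
    ((1.0, 0.0, 0.0, 0.0), (1, 0, 0, 0)),  # input 1 active: output 1 on
    ((1.0, 1.0, 0.0, 0.0), (1, 0, 1, 0)),  # inputs 1 and 2 active: outputs 1 and 3 on
    ((0.0, 0.0, 1.0, 1.0), (0, 1, 0, 1)),  # inputs 3 and 4 active: outputs 2 and 4 on
]


def predict_outputs(examples, inputs):
    """Return the output pattern of the recorded example closest to `inputs`."""
    _, pattern = min(examples, key=lambda ex: math.dist(ex[0], inputs))
    return pattern


print(predict_outputs(examples, (0.9, 0.8, 0.1, 0.0)))  # (1, 0, 1, 0)
print(predict_outputs(examples, (0.1, 0.0, 0.9, 0.7)))  # (0, 1, 0, 1)
```

Storing whole output patterns, rather than training each pin separately, is what lets a combination of inputs map to a combination of outputs. `math.dist` requires Python 3.8 or later.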

Prototype 4 – Intern

An extension for Trainee v1 that lets an output pin control household devices. The Intern has a power outlet switched by a relay, so Trainee v1 can train objects that run on 230 V.

Its purpose was to invite non-makers and everyday consumers to manipulate objects they can relate to, and to inspire custom problem-solving in their own contexts.

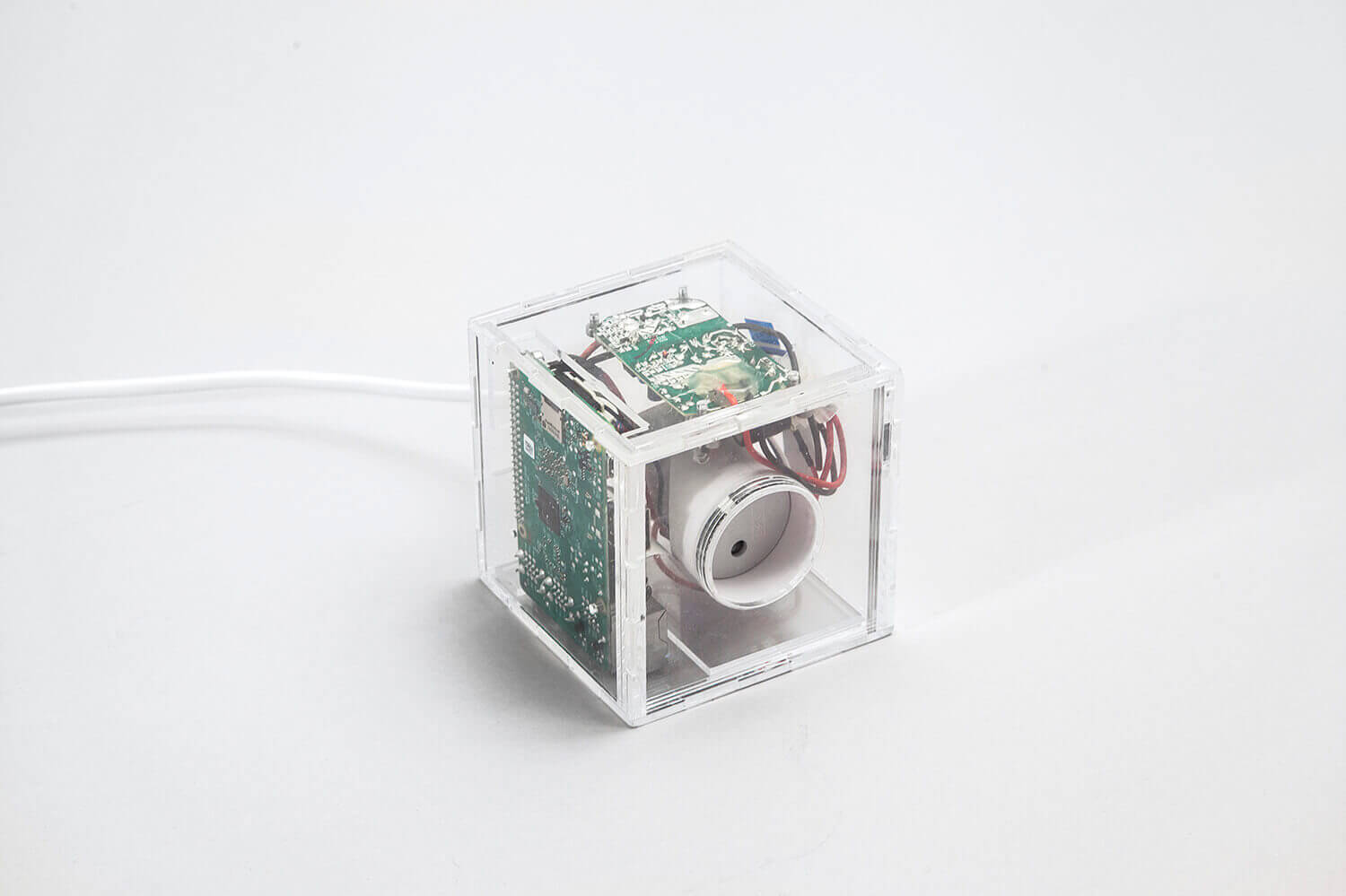

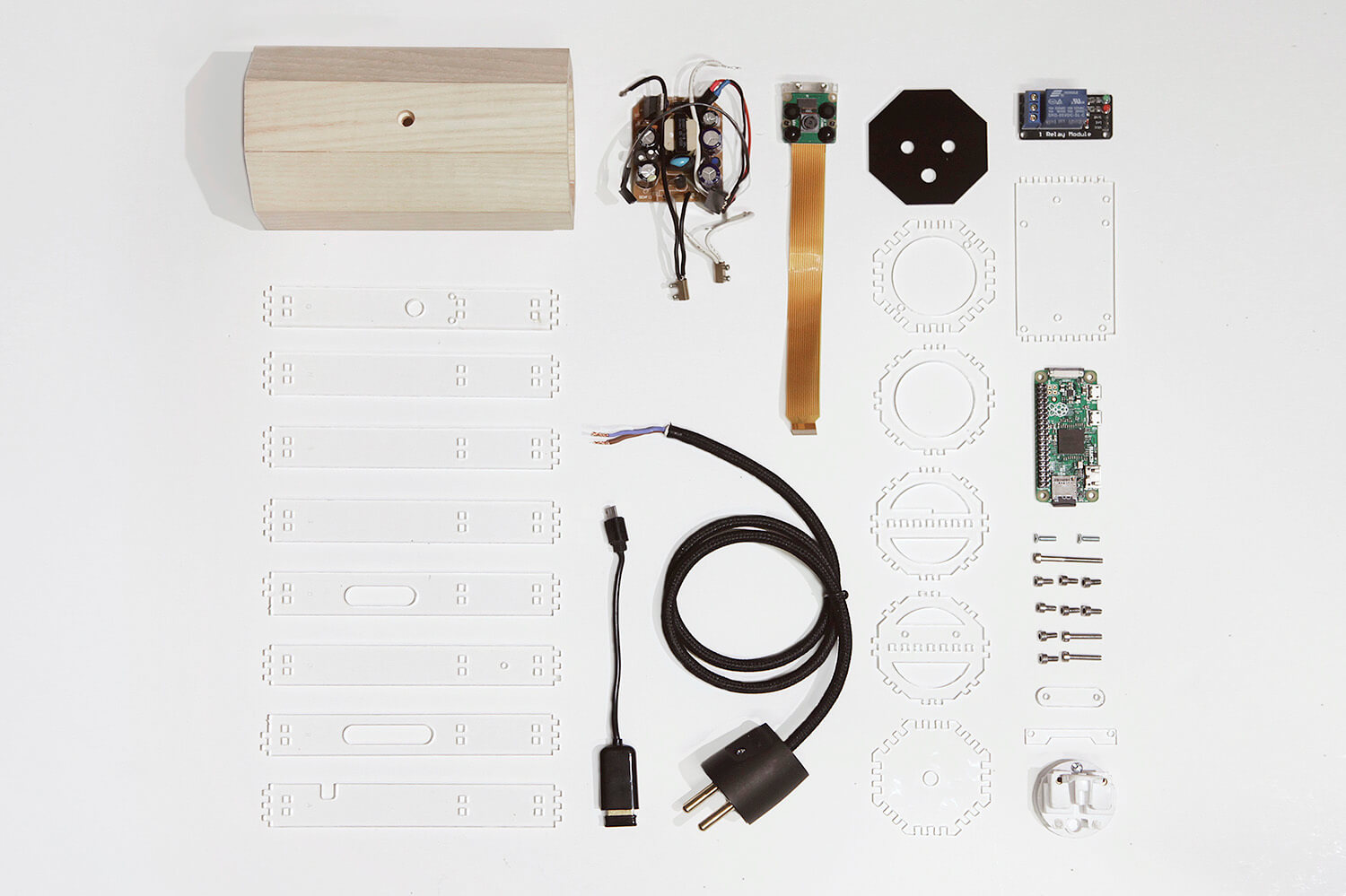

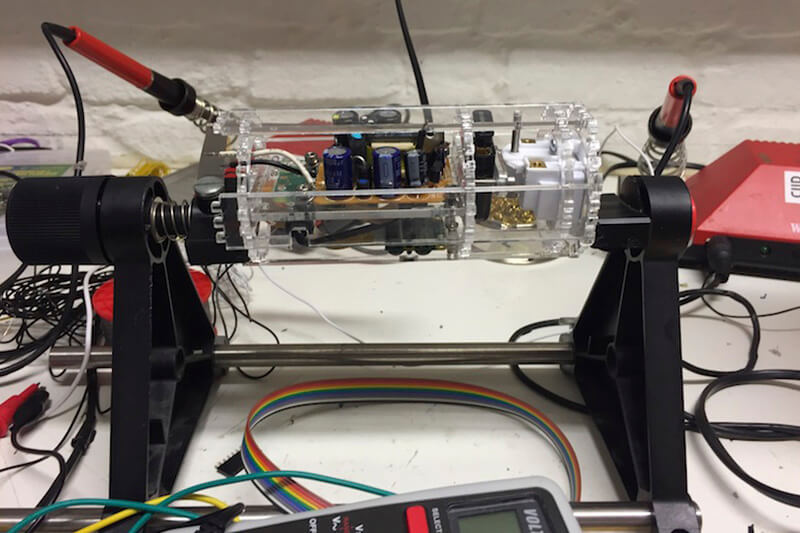

Prototype 5 – Apprentice

Designed to combine everything learned from the previous prototypes into one device. Apprentice uses computer vision as its sensor input and is controlled wirelessly from a mobile app, through which feedback is given.

With a Raspberry Pi 3 as its brain, it runs a custom server that connects the app to the neural network. Any domestic device can be plugged into the Apprentice and learn to respond to your command.

Prototype 6 – Objectifier

A smaller, friendlier and smarter version of the Apprentice. It offers the experience of training an intelligence to control other domestic objects. The system can adapt to any behavior or gesture. Through the training app, the Objectifier learns when it should turn another object on or off. By combining powerful computer vision with the right machine learning algorithm, the program learns to understand what it sees and which behavior should trigger which action.
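The pipeline described above, from camera image to on/off decision, can be sketched in three steps: reduce each camera frame to a small feature vector, store frames labeled "on" or "off" through the training app, and let the nearest stored examples vote on each new frame. This is a toy illustration under loudly stated assumptions: frames are tiny grayscale grids, the features are plain average-pooled pixels, and k-nearest-neighbor voting stands in for the neural network the Objectifier actually uses. All names are hypothetical.

```python
import math
from collections import Counter


def frame_features(frame, block=2):
    """Average-pool a grayscale frame (rows of 0-255 values) into a normalized vector."""
    feats = []
    for r in range(0, len(frame) - block + 1, block):
        for c in range(0, len(frame[0]) - block + 1, block):
            total = sum(frame[r + i][c + j] for i in range(block) for j in range(block))
            feats.append(total / (block * block * 255.0))
    return feats


class ObjectifierSketch:
    """Hypothetical k-NN stand-in for the trained model, not the real implementation."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # (feature vector, "on" or "off")

    def add_example(self, frame, label):
        # Called when the user labels the current camera frame in the app.
        self.examples.append((frame_features(frame), label))

    def predict(self, frame):
        # Majority vote among the k stored frames closest to this one.
        feats = frame_features(frame)
        nearest = sorted(self.examples, key=lambda ex: math.dist(ex[0], feats))[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]


def toy_frame(left, right):
    # 4x4 frame: left half has brightness `left`, right half has brightness `right`.
    return [[left, left, right, right] for _ in range(4)]


model = ObjectifierSketch(k=3)
for left, right in [(250, 10), (240, 20), (230, 0)]:
    model.add_example(toy_frame(left, right), "on")   # e.g. a bright shape on the left
for left, right in [(10, 10), (0, 20), (20, 0)]:
    model.add_example(toy_frame(left, right), "off")  # e.g. an empty, dark scene
print(model.predict(toy_frame(245, 15)), model.predict(toy_frame(5, 5)))  # on off
```

In a real setup the pooled pixels would be replaced by features from a vision model, and the predicted label would drive the relay that switches the connected object.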

Special thanks to:

Ruben van der Vleuten, David A. Mellis, Francis Tseng, Patrick Hebron and Andreas Refsgaard

Software used:

openFrameworks, Processing, Wekinator, ml4a, Node.js and p5.js
